Introduction

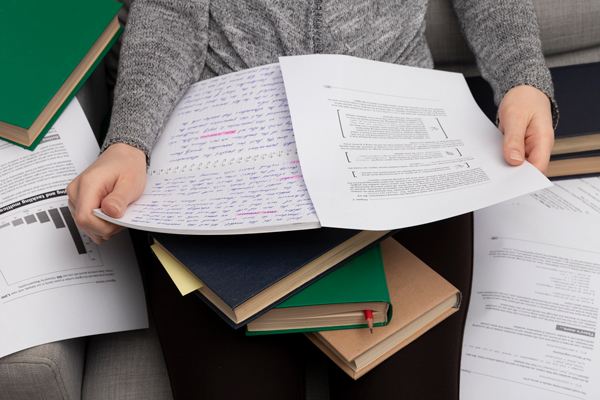

For many students, the dissertation introduction is the most challenging chapter to write. It accounts for just 10–15% of the total word count, yet it sets the direction for the entire project and strongly influences the examiner’s first impression. Despite its importance, many students struggle to write an introduction that is focused and aligned with examiner expectations.

This guide is designed to solve that problem. Step by step, it explains how to structure a dissertation introduction that is clear, persuasive, and aligned with academic expectations, from identifying the research gap to mapping out aims, objectives, and chapter structure. With this approach, you can transform the most daunting part of your dissertation into its strongest foundation.

1. Why the Introduction Matters

Your introduction chapter is the first impression examiners will have of your dissertation or thesis, and it sets the tone for the whole project. A well-written introduction tells the examiner:

- This research area matters.

- There’s a clear gap that needs addressing.

- The student has a logical plan to investigate it.

If your introduction is clear and confident, examiners will read the rest of your work with trust. If it’s vague or incomplete, they may assume the same problems will appear in later chapters.

Now that you understand why the introduction is important, the next step is to understand the core elements of a dissertation introduction and how to write each one well.

2. The Core Elements of a Strong Introduction

A good introduction isn’t random background writing; it’s a structured chapter built from seven key parts. If you cover each of these, your examiner will have no doubt that your project is well thought through.

- Problem Overview (Background & Context) – set the stage with facts and explain the broader importance of your field.

- Current Issues (Significance & Gap) – show what’s missing in current research and why it matters to address it.

- Project Details (Scope & Approach) – give a high-level overview of what your study covers and how you’ll approach it.

- Aims & Objectives – state the overarching goal and break it into specific, measurable tasks.

- Research Question & Novelty – phrase your study as a clear research question and explain what makes it original.

- Feasibility, Commercial Context & Risk – show that your project is realistic, relevant, and aware of potential challenges.

- Report Structure – give readers a roadmap of what each chapter will cover.

Think of these as the building blocks of Chapter 1. Together, they transform your introduction into a logical, persuasive foundation for the rest of your dissertation/thesis.

3.1 Problem Overview (Background & Context)

This is the first building block of your introduction, where you establish the research landscape for the examiner. Here you need to contextualize your research area, demonstrate its significance, and identify opportunities for advancement rather than framing existing work as inherently flawed. Focus on how current approaches or understanding could be extended, refined, or applied in new ways. This section is your chance to convince the reader that your research addresses a meaningful gap or builds upon existing knowledge in a valuable way, making it worthy of investigation.

Here’s how to build it step by step:

1. Field + Importance

Here you should start broad and show why your research area is significant. Examiners want to see that you’re tackling a problem that matters at scale, not a niche or trivial one.

Name your field clearly (e.g., computer vision, cybersecurity, public health).

Show its scope and reach — where it applies and who it affects.

Use a fact, trend, or statistic to demonstrate scale and urgency.

Point to why now — recent growth, policy changes, or risks making the topic timely.

Example (Face-Age project):

“Accurately estimating age from faces is a major challenge in computer vision, affecting security, healthcare, content regulation, and businesses (Bekhouche et al., 2024). With digital interactions growing, shown by over 14.3 trillion photos taken worldwide in 2024 (Broz, 2022), the need for automatic age verification has increased.”

Why this works:

It places the study firmly in a recognized field (computer vision → age estimation).

It uses a statistic (14.3 trillion photos) to demonstrate scale.

It highlights multiple domains (security, healthcare, content regulation, business), proving broad importance.

Example (Cybersecurity):

“Cybersecurity threats cost businesses $8 trillion USD annually (IBM, 2023), with ransomware attacks increasing by 37% year-over-year (Sophos, 2024). These breaches compromise national security, critical infrastructure, and personal data, making advanced threat detection a global priority.”

Why this works:

It uses hard monetary figures ($8 trillion) to show economic scale.

It cites a growth trend (37% YoY increase) to emphasize urgency.

It links to high-stakes domains (national security, infrastructure).

Example (Public Health):

"Antimicrobial resistance (AMR) causes 1.27 million deaths globally each year (WHO, 2023), with 700,000 additional deaths linked to drug-resistant infections in low-resource settings. This threatens modern medicine and requires urgent innovation in diagnostic tools."

Why this works:

Uses mortality statistics (1.27 million deaths) to show human impact.

Highlights disparities (low-resource settings).

Ties to broader consequences (threat to modern medicine).

2. Technical Problems

After setting the field, you need to show what is failing technically in current systems. Be precise here; vague language like “inaccurate” won’t convince examiners.

Identify the specific errors or weaknesses (false positives/negatives, scalability limits, bottlenecks).

Explain the consequences of these failures (security risks, loss of reliability, weak performance).

Keep it grounded in practical impact, not just abstract flaws.

Example (Face-Age project):

“Nevertheless, current systems have serious problems. Technically, age prediction errors cause two issues: letting underage users access restricted platforms (false negatives) and blocking legitimate users (false positives), which weakens security and reliability (Nguyen et al., 2024).”

Why this works:

It identifies clear technical failure modes: false negatives and false positives.

It avoids vague language (“systems are inaccurate”) and shows how errors appear in practice.

It ties those errors to core stakes: weakened security and reliability.
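
To make the two failure modes in the Face-Age example concrete, the sketch below counts them for an age gate. It is a minimal illustration, not part of the original project: the function name `count_age_gate_errors`, the 18-year threshold, and the sample ages are all hypothetical. It follows the example's convention that a "positive" means a user is flagged as underage.

```python
def count_age_gate_errors(true_ages, predicted_ages, min_age=18):
    """Count false negatives and false positives for an age gate.

    Convention (taken from the Face-Age example): "positive" means the
    system flags a user as underage.
    """
    false_negatives = 0  # underage user not flagged, so wrongly admitted
    false_positives = 0  # adult user flagged, so wrongly blocked
    for true_age, predicted_age in zip(true_ages, predicted_ages):
        is_underage = true_age < min_age
        flagged_underage = predicted_age < min_age
        if is_underage and not flagged_underage:
            false_negatives += 1
        elif not is_underage and flagged_underage:
            false_positives += 1
    return false_negatives, false_positives


# Hypothetical sample: five users with their real and estimated ages.
true_ages = [16, 17, 25, 30, 19]
predicted_ages = [19, 15, 17, 31, 18]
fn, fp = count_age_gate_errors(true_ages, predicted_ages)
print(f"false negatives: {fn}, false positives: {fp}")
```

Reporting the two counts separately, rather than one overall accuracy figure, is what lets you describe each failure mode and its consequence precisely.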

Example (Cybersecurity):

"Intrusion detection systems (IDS) generate 72% false alarms in enterprise networks (MITRE, 2024), primarily due to outdated signature databases and inability to analyze encrypted traffic. These false alerts overwhelm security teams, causing critical threats to be missed and response times to increase by 40%."

Why this works:

Quantifies failure rate (72% false alarms) with specific causes (signatures, encryption).

Shows operational impact (overwhelmed teams, missed threats).

Uses metrics (40% slower response) to demonstrate real-world consequences.

Example (Public Health):

"Current diagnostic tools fail to detect early-stage tuberculosis in 45% of cases (WHO, 2023), due to low sensitivity in sputum tests and lack of rapid molecular diagnostics in rural clinics. This results in delayed treatment and 20% higher transmission rates in low-resource settings."

Why this works:

Specifies technical weaknesses (low sensitivity, lack of diagnostics).

Links to health outcomes (delayed treatment, higher transmission).

Uses context-specific data (rural clinics, low-resource settings).

3. Ethical/Fairness Issues

Here you should highlight that technical flaws often have real human consequences. Examiners will look to see if you’ve considered fairness and inclusivity.

Show how errors lead to bias or discrimination.

Identify which groups are most affected (minorities, certain ages, underrepresented users).

Use fairness-related terms: equity, trust, inclusivity, bias.

Prove your project addresses not just accuracy, but also ethical responsibility.

Example (Face-Age project):

“Ethically, the system works differently for different groups of people as it makes more mistakes when estimating the age of people from certain racial groups or very young or old individuals, leading to unfair treatment and bias in services (Guo et al., 2019).”

Why this works:

It highlights bias and proves the system isn’t equally fair.

It names vulnerable groups (racial minorities, very young/old users).

It uses fairness language (“unfair treatment and bias”) that resonates with both academic and ethical concerns.

Example (Cybersecurity):

"Facial recognition systems misidentify Black and Asian faces 10-100 times more frequently than white faces (NIST, 2023), disproportionately flagging minorities as security threats. This algorithmic bias erodes trust in law enforcement and violates equity principles in public safety systems."

Why this works:

Quantifies disparity with specific metrics (10-100× higher misidentification).

Names affected groups explicitly (Black and Asian individuals).

Uses systemic fairness terms ("equity principles," "erodes trust").

Links technical flaws to institutional consequences (law enforcement mistrust).

Example (Public Health):

"AI diagnostic tools underdetect skin cancer in patients with darker skin tones by 34% (JAMA, 2024), due to training data imbalances favoring light-skinned populations. This exacerbates health disparities and undermines inclusive healthcare delivery for marginalized communities."

Why this works:

Provides clinical impact data (34% underdetection rate).

Identifies vulnerable group with specificity (darker-skinned patients).

Connects technical cause (data imbalances) to ethical outcome (health disparities).

Uses equity-focused language ("inclusive healthcare," "marginalized communities").

4. Commercial/Regulatory Risks

Now you need to expand the stakes to business and legal dimensions. This shows your research has relevance beyond academia.

Link failures to financial risks (lost revenue, fines, compliance costs).

Mention laws/regulations in your domain (GDPR, HIPAA, CCPA).

Highlight the importance of customer or stakeholder trust.

Frame the issue as not just technical, but commercially critical.

Example (Face-Age project):

“Commercially, inaccurate age estimation leads to high costs from fines for breaking rules like GDPR/CCPA and lost revenue when customers leave due to failed age checks.”

Why this works:

It connects technical flaws to money and law, two powerful motivators.

It names specific regulations (GDPR, CCPA) to show the issue is real, not hypothetical.

It brings in customer trust as another factor, linking directly to business sustainability.

Example (Cybersecurity):

"Data breaches cost enterprises an average of $4.35M per incident (IBM, 2023), with fines under GDPR reaching €20M or 4% of global revenue. These violations erode customer trust, causing 30% customer churn in affected sectors like finance and healthcare."

Why this works:

Quantifies financial impact ($4.35M per breach, €20M fines) with regulatory specificity.

Names concrete laws (GDPR) and penalty thresholds.

Links technical failures to business outcomes (customer churn) in high-stakes industries.

Example (Public Health):

"Hospital data breaches incur HIPAA penalties averaging $2.2M (OCR, 2024), while misdiagnosis lawsuits cost providers $1.5B annually. These failures damage patient trust, leading to a 15% reduction in preventative care visits and higher insurance premiums across healthcare systems."

Why this works:

Uses sector-specific financial data ($2.2M HIPAA penalties, $1.5B lawsuit costs).

Cites relevant regulations (HIPAA) and their enforcement scale.

Shows cascading commercial effects (reduced care utilization, premium increases).

5. Economic/Operational Challenges

Highlight the hidden costs and inefficiencies that weaken current approaches. This strengthens the case that the problem is systemic.

Point to resource costs (manual work, annotation, compute, energy).

Mention subjectivity or human error in current processes.

Stress how inefficiency makes solutions expensive and unsustainable.

Position your research as tackling these practical barriers.

Example (Face-Age project):

“Economically, the labor-intensive annotation process, compounded by human subjectivity and error, escalates development expenses by requiring extensive manual labeling of facial data (Md. Eshmam Rayed et al., 2024).”

Why this works:

It reveals the hidden cost: manual annotation.

It proves this isn’t only a technical bottleneck but an economic drain.

It shows that even human input is unreliable due to subjectivity and error.

Example (Cybersecurity):

"Manual threat hunting consumes 3,200 hours annually per security team (Deloitte, 2024), with analyst fatigue causing 40% of critical alerts to be missed. This inefficiency forces enterprises to spend $1.2M yearly on outsourced SOC services while increasing breach response times by 65%."

Why this works:

Quantifies resource drain (3,200 hours/year) and human error impact (40% missed alerts).

Links inefficiency to direct costs ($1.2M outsourcing) and operational risks (65% slower response).

Positions automation as a critical solution.

Example (Public Health):

"Paper-based patient records require 15 hours/week for manual data entry per clinic (WHO, 2023), with transcription errors affecting 18% of treatment decisions. These inefficiencies cost health systems $120B annually in administrative waste and delay diagnoses by 8 days on average."

Why this works:

Specifies operational burdens (15 hours/week) and error rates (18% affected decisions).

Quantifies systemic costs ($120B waste) and clinical impact (8-day delays).

Shows how manual processes undermine both efficiency and care quality.

6. Concluding Link

Finally, you need to tie everything together and prepare the reader for the next section. Keep this short but impactful.

Bundle the issues (technical + ethical + commercial + economic).

Show they collectively justify urgent research.

Transition by saying your project will address these problems, with details coming in Current Issues (Significance & Gap).

Example (Face-Age project):

“These connected challenges (technical weaknesses, unfairness, business risks, and high costs) show the urgent need for a better system. This research aims to fill this gap by creating an age estimation system that is accurate, fair, and practical, reducing operational risks and ensuring fair use in real-world applications.”

Why this works:

It summarises all dimensions of the problem in one neat line.

It introduces the research aim (better age estimation system).

It creates a smooth bridge to the next section (Current Issues / Significance & Gap).

Example (Cybersecurity):

"These interconnected challenges — intrusion detection failures, algorithmic bias in facial recognition, escalating breach costs, and unsustainable manual threat hunting — demonstrate the critical need for integrated security solutions. This research develops AI-driven threat detection systems that address technical vulnerabilities, ethical concerns, and operational inefficiencies, with detailed analysis in subsequent sections."

Why this works:

Bundles cybersecurity-specific issues (detection failures, bias, costs, manual processes).

Positions solution as "integrated" (not siloed).

Uses transition language ("detailed analysis in subsequent sections").

Example (Public Health):

"The convergence of diagnostic inaccuracies, healthcare disparities, regulatory penalties, and administrative inefficiencies creates an urgent crisis in health systems. This project proposes AI-assisted diagnostic tools that simultaneously improve accuracy, advance health equity, ensure compliance, and reduce operational waste — with implementation strategies examined in the following chapters."

Why this works:

Links health-specific challenges (diagnostics, disparities, penalties, inefficiencies).

Frames solution as multi-dimensional ("simultaneously improve accuracy, advance equity...").

3.1 Problem Overview (Background & Context): Step Mapping

Step 1: Field + Importance

“Accurately estimating age from faces is a major challenge in computer vision, affecting security, healthcare, content regulation, and businesses (Bekhouche et al., 2024). With digital interactions growing, shown by over 14.3 trillion photos taken worldwide in 2024 (Broz, 2022), the need for automatic age verification has increased.”

Step 2: Technical Problems

“Nevertheless, current systems have serious problems. Technically, age prediction errors cause two issues: letting underage users access restricted platforms (false negatives) and blocking legitimate users (false positives), which weakens security and reliability (Nguyen et al., 2024).”

Step 3: Ethical/Fairness Issues

“Ethically, the system works differently for different groups of people as it makes more mistakes when estimating the age of people from certain racial groups or very young or old individuals, leading to unfair treatment and bias in services (Guo et al., 2019).”

Step 4: Commercial/Regulatory Risks

“Commercially, inaccurate age estimation leads to high costs from fines for breaking rules like GDPR/CCPA and lost revenue when customers leave due to failed age checks.”

Step 5: Economic/Operational Challenges

“Economically, the labor-intensive annotation process, compounded by human subjectivity and error, escalates development expenses by requiring extensive manual labeling of facial data (Md. Eshmam Rayed et al., 2024).”

Step 6: Concluding Link

“These connected challenges (technical weaknesses, unfairness, business risks, and high costs) show the urgent need for a better system. This research aims to fill this gap by creating an age estimation system that is accurate, fair, and practical, reducing operational risks and ensuring fair use in real-world applications.”

3.2 Current Issues (Significance & Gap)

The Current Issues (sometimes called Significance & Gap) section follows naturally after the Problem Overview. Where the Problem Overview sets the stage and shows why the field is important, this section zooms in on the exact weaknesses of current approaches and explains why solving them matters.

Think of it as answering the examiner’s key question:

“What is missing in current research or practice, and why does your study need to exist?”

1. Identify the Core Weakness

Start by showing the single biggest limitation that holds your field back. Keep it sharp and convincing.

Name the central obstacle (e.g., dependence on large labeled datasets, limited generalisability).

Explain why this creates a serious bottleneck for progress.

Link it naturally back to the background so the flow continues.

Example (Face-Age project):

“The project addresses several critical gaps in current facial age estimation systems, primarily the persistent challenge of data scarcity where conventional supervised approaches require extensive labeled datasets that are too costly and time-consuming to compile, creating a significant bottleneck for scalable model development (Ma et al., 2024).”

Why this works:

Names the main gap → data scarcity.

Points to the cause → supervised approaches require too many labeled datasets.

Explains the impact → bottleneck for scalability.

Example (Cybersecurity):

"Current cybersecurity frameworks face a critical limitation in real-time threat adaptation, where signature-based systems cannot detect zero-day attacks or polymorphic malware. This rigidity creates a 72-hour average detection delay (MITRE, 2024), allowing attackers to exfiltrate data before defenses activate, and directly undermines the core purpose of proactive security."

Why this works:

Identifies the core weakness → inability to adapt to real-time threats.

Quantifies the bottleneck → 72-hour detection delay.

Links to background consequences → data exfiltration and failed proactive security.

Example (Public Health):

"Public health surveillance systems suffer from fragmented data integration across healthcare providers, laboratories, and government agencies. This fragmentation creates critical blind spots in outbreak detection, delaying response times by 14 days on average (WHO, 2023) and severely limiting the effectiveness of epidemic containment strategies."

Why this works:

Names the central obstacle → fragmented data integration.

Explains the impact → 14-day detection delays and containment failures.

Connects to field significance → undermines epidemic response (referencing public health priorities).

2. Expose Additional Shortcomings

One problem rarely stands alone. Strengthen your argument by pointing out secondary flaws that pile onto the main issue.

Mention underused opportunities (like abundant unlabeled data left idle).

Highlight systemic inefficiencies that reinforce the primary gap.

Stress that the field is missing chances to innovate by leaving these flaws unresolved.

Example (Face-Age project):

“This limitation is made worse by the abundance of unlabeled facial images spreading across digital platforms, resources that remain unused due to the lack of methods to effectively use them for training (Dwivedi et al., 2021).”

Why this works:

Creates a contrast: labeled data is scarce but unlabeled data is abundant.

Shows there is untapped potential.

Frames the gap as not just scarcity but also inefficient use of available data.

Example (Cybersecurity):

"This rigidity is exacerbated by underutilized threat intelligence sharing across organizations, where 85% of attack indicators remain siloed in private databases (ENISA, 2024). Fragmented security ecosystems prevent collective defense models, while legacy system integration costs deter innovation in adaptive architectures."

Why this works:

Identifies wasted resources (85% of indicators unused).

Highlights systemic inefficiency (fragmented ecosystems, legacy costs).

Shows missed opportunities (collective defense, adaptive innovation).

Example (Public Health):

"These silos are compounded by untapped mobile health data from 5.2 billion global users (ITU, 2023), which could enable real-time disease tracking but remains disconnected from formal surveillance. Bureaucratic data governance barriers and interoperability failures between health systems prevent integrated early-warning solutions."

Why this works:

Highlights underused opportunity (5.2B users' mobile data).

Exposes systemic barriers (bureaucracy, interoperability failures).

Stresses missed innovation potential (real-time tracking, integrated solutions).

3. Address Fairness and Reliability

Don’t let your discussion stay purely technical. Examiners want to see that you’ve thought about the social consequences of weak systems.

Show how models perform unevenly across different groups (age, gender, ethnicity).

Emphasise how this undermines fairness, inclusivity, and trust.

Position your study as one that aims for reliable outcomes for all users, not just the majority.

Example (Face-Age project):

“Additionally, existing models show clear demographic bias, with performance differences across age groups, ethnicities, and genders that hurt fairness and reliability in real-world applications, particularly for underrepresented populations (Guo et al., 2019).”

Why this works:

Explicitly mentions bias and inequality.

Identifies specific affected groups (age, ethnicity, gender).

Links bias to reliability in real-world use, making the stakes practical.

Example (Cybersecurity):

"Intrusion detection systems exhibit 28% higher false positive rates for network traffic from Global South regions (IEEE Security, 2024), disproportionately flagging legitimate cross-border transactions as malicious. This erodes trust in international digital commerce and forces minority-owned businesses to absorb $3.2B annually in verification delays."

Why this works:

Quantifies disparity (28% higher false positives) with geographic specificity.

Shows real-world consequences (verification costs, commerce disruption).

Links technical bias to economic harm for marginalized groups.

Example (Public Health):

"Diagnostic AI tools demonstrate 22% lower accuracy for patients with co-occurring conditions (Lancet Digital Health, 2023), systematically underprioritizing elderly and disabled populations. This compromises health equity by delaying critical interventions for society's most vulnerable while over-representing healthy demographics in training data."

Why this works:

Uses clinical metrics (22% lower accuracy) with population specifics.

Identifies high-stakes consequences (delayed interventions for vulnerable groups).

Connects technical bias to systemic health inequity.

4. Point Out Practical Barriers

Bring the conversation down to earth by showing why current approaches don’t translate well into practice.

Note issues like computational cost, energy demand, and long training times.

Mention deployment limits: too heavy for real-time use or edge devices.

Underscore that solutions confined to labs will not scale to real-world adoption.

Example (Face-Age project):

“Computational inefficiencies further limit deployment, as top deep learning models demand substantial resources, making their use in edge devices or real-time systems difficult (Feng et al., 2025).”

Why this works:

Names the practical barrier → resource demands.

Specifies contexts (edge devices, real-time).

Expands the issue from accuracy to feasibility and scalability.

Example (Cybersecurity):

"Next-generation encryption algorithms require 40× more computational power than current standards (NIST, 2024), consuming 85% of server resources and increasing energy costs by $2.1M annually per data center. These demands prevent deployment on IoT devices with limited processing capabilities, leaving critical infrastructure vulnerable."

Why this works:

Quantifies resource drain (40× power, 85% server resources) and costs ($2.1M/year).

Identifies deployment limits (IoT devices).

Links lab-only solutions to real-world risks (infrastructure vulnerability).

Example (Public Health):

"AI-driven diagnostic platforms need 12-hour training cycles on specialized hardware (Nature Medicine, 2023), consuming 300kW per model – prohibitively expensive for rural clinics. This confines advanced diagnostics to urban hospitals, exacerbating healthcare disparities in low-resource regions."

Why this works:

Specifies operational barriers (12-hour cycles, 300kW energy use).

Highlights deployment constraints (rural clinics can't afford).

Shows real-world impact (urban-rural healthcare divide).

5. Reveal the Missing Piece

Now it’s time to set up your originality. What have other researchers not yet attempted, combined, or tested? This should lead directly into your project.

Identify techniques or integrations that remain unexplored.

Show that existing studies haven’t managed to bridge these areas effectively.

Make it clear this is the gap your project is designed to close.

Example (Face-Age project):

“The project will also explore the gap in using transfer learning with semi-supervised techniques; while pre-trained CNNs like ResNet and EfficientNet offer strong feature extraction capabilities (Randellini, 2023), current frameworks fail to combine these effectively with pseudo-labeling and consistency regularization to maximize the use of both labeled and unlabeled data for continuous age prediction (He et al., 2022; Jo, Kahng and Kim, 2024).”

Why this works:

Recognises strengths of current methods (transfer learning).

Points to what’s missing (integration with semi-supervised learning, or SSL).

Defines the innovation angle your research will take.

Example (Cybersecurity):

"While behavioral analytics excel at detecting insider threats (MITRE, 2023) and quantum encryption provides theoretically unbreakable communication (NIST, 2024), no existing framework integrates these approaches to address real-time threat adaptation. Current research treats these as siloed solutions, missing the opportunity to create adaptive security systems that dynamically adjust defenses based on behavioral patterns while maintaining quantum-resistant encryption."

Why this works:

Identifies unexplored integration (behavioral analytics + quantum encryption).

Shows existing studies treat them as siloed (not bridged).

Positions the gap as a barrier to adaptive security systems.

Example (Public Health):

"Although genomic surveillance effectively tracks pathogen evolution (Nature, 2023) and mobile health (mHealth) apps provide real-time symptom reporting (Lancet, 2024), no integrated system combines these with environmental sensor data to predict outbreaks. Current approaches operate in isolation, preventing the development of comprehensive early-warning platforms that could correlate pathogen mutations with population mobility and environmental conditions."

Why this works:

Names uncombined techniques (genomic surveillance + mHealth + environmental sensors).

Highlights fragmentation in existing research (operating in isolation).

Defines the gap as a barrier to predictive outbreak platforms.

6. Wrap Up with a Forward Look

End the section with a short but confident summary that points the reader to your solution.

Bundle the problems into a coherent cluster (technical, fairness, efficiency, underuse).

Stress that they collectively demand fresh research.

Transition smoothly to Project Details, where you’ll outline how your study responds.

Example (Face-Age project):

“These interconnected challenges (data dependency, bias, computational demands, and under-use of unlabeled resources) collectively hinder the development of accurate, fair, and commercially viable age estimation systems. The project will target these gaps by proposing a unified semi-supervised regression framework, with detailed solutions and their commercial implications to be examined in subsequent chapters.”

Why this works:

Summarises multiple issues clearly.

Connects them to the need for accuracy, fairness, and commercial viability.

Hints at your solution (semi-supervised regression framework) while promising details later.

Example (Cybersecurity):

"These converging barriers — signature-based detection limitations, algorithmic bias in facial recognition, unsustainable manual threat hunting, and fragmented intelligence sharing — collectively undermine cybersecurity resilience. This research addresses these gaps through an integrated AI-driven threat detection framework, with implementation strategies and scalability analysis detailed in the following sections."

Why this works:

Bundles cybersecurity-specific issues (detection limits, bias, manual processes, fragmentation).

Emphasizes systemic impact ("undermine cybersecurity resilience").

Uses transition language ("implementation strategies... detailed in following sections").

Example (Public Health):

"The intersection of diagnostic inaccuracies, healthcare disparities, administrative inefficiencies, and fragmented data integration creates an urgent crisis in public health response. Our project confronts these challenges through an AI-assisted surveillance platform that unifies genomic, mobile, and environmental data streams, with design specifications and equity-centered deployment examined in subsequent chapters."

Why this works:

Clusters health-specific challenges (inaccuracies, disparities, inefficiencies, fragmentation).

Positions solution as unifying ("unifies genomic, mobile, and environmental data").

Explicitly signposts forward ("design specifications... examined in subsequent chapters").

In summary

The Current Issues section is where you:

Name the main gap (data scarcity).

Reinforce it with unused resources (unlabeled data).

Add fairness concerns.

Show computational barriers.

Expose the solution gap (transfer + SSL not combined).

Conclude by bundling issues and signposting your framework.

Word count tip: Aim for 300–450 words. Keep it concise but layered: every gap should be distinct and clearly linked to your project.

3.2 Current Issues (Significance & Gap): Step Mapping (Tooltip)

Step 1: State the primary gap clearly

“…primarily the persistent challenge of data scarcity where conventional supervised approaches require extensive labeled datasets that are too costly and time-consuming to compile, creating a significant bottleneck for scalable model development (Ma et al., 2024).”

Step 2: Add the reinforcing limitation (unused unlabeled data)

“This limitation is made worse by the abundance of unlabeled facial images spreading across digital platforms, resources that remain unused due to the lack of methods to effectively use them for training (Dwivedi et al., 2021).”

Step 3: Highlight fairness and bias issues

“Additionally, existing models show clear demographic bias, with performance differences across age groups, ethnicities, and genders that hurt fairness and reliability in real-world applications, particularly for underrepresented populations (Guo et al., 2019).”

Step 4: Point to computational/efficiency problems

“Computational inefficiencies further limit deployment, as top deep learning models demand substantial resources, making their use in edge devices or real-time systems difficult (Feng et al., 2025).”

Step 5: Expose the underexplored solution gap

“The project will also explore the gap in using transfer learning with semi-supervised techniques; while pre-trained CNNs like ResNet and EfficientNet offer strong feature extraction capabilities (Randellini, 2023), current frameworks fail to combine these with pseudo-labeling and consistency regularization to maximize use of both labeled and unlabeled data (He et al., 2022; Jo, Kahng and Kim, 2024).”

Step 6: Bundle the issues and point forward

“These interconnected challenges (data dependency, bias, computational demands, and under-use of unlabeled resources) collectively hinder accurate, fair, and commercially viable age estimation systems. The project will target these gaps by proposing a unified semi-supervised regression framework, with detailed solutions and their commercial implications to be examined in subsequent chapters.”

3.3 Project Details (Scope & Approach)

A short, high-level overview of what you’re building, why you’re building it, and how you’ll approach it: just enough for the examiner to see focus and feasibility. It’s not the methods chapter; it’s the preview.

What to include:

Overall goal (1–2 lines): what you’re developing and which headline problems it targets.

Core aim (1 line): the central ambition (e.g., accuracy + lower label dependency).

Key features (2–4 items): the defining ingredients of your solution.

Priorities (2–3): what you optimise for (e.g., data efficiency, fairness, scalability).

Management & validation (1–2 lines): how you’ll structure work and verify results.

Forward pointer (1 line): where fuller design/feasibility details appear later.

Target length: 150–250 words (tight but informative).

1. State the Overall Goal

Start with a clear opening line that tells the reader what kind of project you’re building. This is your “headline statement.”

Frame it as: “This project develops/creates/designs…”.

Mention the type of framework/model/system you’re working on.

Connect it back to the central problem or gap.

Example:

“This project develops a semi-supervised regression model to address critical challenges in facial age estimation, focusing on mitigating data scarcity and demographic bias.”

Why this works:

Identifies the deliverable (semi-supervised regression model).

Pins down two headline challenges (data scarcity, bias).

Signals immediate relevance without drifting into method detail.

EXAMPLE (Cybersecurity):

"This project creates an adaptive AI-driven threat detection framework to overcome limitations in real-time cybersecurity, specifically targeting zero-day attack prediction and cross-organizational intelligence integration."

Why this works:

Clearly defines the deliverable (adaptive AI-driven framework).

Highlights two critical cybersecurity gaps (zero-day prediction, intelligence integration).

Avoids technical jargon while establishing purpose.

EXAMPLE (Public Health):

"This project designs an integrated early-warning surveillance platform that unifies genomic, mobile, and environmental data streams to address systemic barriers in outbreak prediction and health equity."

Why this works:

Specifies the solution type (integrated surveillance platform).

Targets two public health priorities (outbreak prediction, equity).

Emphasizes multi-source data integration as the innovation core.

2. Clarify the Core Aim

Now narrow down the big goal into the central ambition of your work. This should read like the project’s guiding purpose.

Explain the primary aim in one or two sentences.

Show what makes it essential (accuracy, fairness, scalability, cost-effectiveness).

Keep it broad enough to cover all objectives, but sharp enough to be meaningful.

Example: “The core goal is to create a robust system that accurately estimates age from facial images while minimizing reliance on exhaustively labeled datasets.”

Why this works:

Balances performance (accuracy) with efficiency (label economy).

Reads as a north star you can evaluate against later.

EXAMPLE (Cybersecurity):

"The core goal is to develop an adaptive threat detection system that identifies zero-day attacks in real-time while reducing false positives by 70% and operating within existing network infrastructure constraints."

Why this works:

Balances performance (real-time detection) with operational efficiency (70% fewer false positives).

Addresses critical industry constraints (existing infrastructure).

Provides measurable targets for evaluation.

EXAMPLE (Public Health):

"The core goal is to establish an integrated surveillance platform that predicts disease outbreaks 14 days earlier than current systems while ensuring equitable access across low-resource settings and reducing administrative burdens by 40%."

Why this works:

Balances performance (14-day earlier prediction) with equity (access for low-resource settings).

Quantifies operational improvement (40% burden reduction).

Addresses both technical and social dimensions of public health.

3. Describe the Key Features

Here you need to highlight what defines your project. These are the building blocks that give your approach its identity.

Mention the methods or models (e.g., transfer learning, SSL, regression).

Identify distinctive aspects (e.g., fairness checks, generalisation across datasets).

Link them back to the issues identified in 3.2 — so features clearly respond to gaps.

Example:

“Key features include leveraging unlabeled data to enhance generalization, implementing transfer learning with pre-trained architectures (e.g., ResNet, EfficientNet), and ensuring equitable performance across diverse age groups and ethnicities.”

Why this works:

Shows how you’ll reach the aim (unlabeled data + transfer).

Grounds claims with well-known backbones (ResNet/EfficientNet).

Keeps fairness explicit as a product requirement, not an afterthought.

EXAMPLE (Cybersecurity):

"Key features include federated learning for cross-organizational threat intelligence sharing, quantum-resistant encryption modules for future-proofing communications, and adaptive behavioral baselining that dynamically adjusts to zero-day attack patterns while reducing false positives."

Why this works:

Addresses earlier gaps (fragmented intelligence, quantum vulnerability, real-time adaptation).

Uses specific techniques (federated learning, quantum-resistant encryption).

Links features to operational outcomes (reduced false positives).

EXAMPLE (Public Health):

"Key features include genomic sequencing integration for pathogen tracking, mobile health API connectivity for real-time symptom reporting, and edge-computing architecture for low-resource deployment, all while incorporating bias-correction algorithms to ensure equitable diagnostic accuracy."

Why this works:

Directly responds to identified gaps (fragmented data, rural deployment limitations, diagnostic bias).

Specifies technical approaches (genomic integration, edge-computing).

Maintains equity focus through bias-correction as a core feature.

4. Highlight Priorities

Examiners like to see that you’ve thought about trade-offs. This is where you tell them what your project values most.

List 2–3 research priorities (e.g., efficiency, bias mitigation, scalability).

Explain briefly why these matter in your context.

Acknowledge implicitly that you’re not trying to solve everything.

Example:

“This research firstly prioritizes data efficiency, reducing dependency on costly manual annotation via SSL; secondly prioritizes bias mitigation, addressing disparities in underrepresented demographics; and lastly prioritizes scalability, designing a framework adaptable to real-world applications like age-restricted access control and personalized healthcare.”

Why this works:

Converts ideals into operational priorities (efficiency, fairness, scale).

Ties priorities to practical contexts, signalling applied relevance.

EXAMPLE (Cybersecurity):

"This project first prioritizes real-time detection capability, enabling immediate identification of zero-day attacks; second prioritizes cross-organizational integration, facilitating collective defense against sophisticated threats; and third prioritizes scalability, ensuring compatibility with legacy infrastructure while acknowledging trade-offs in exhaustive attack vector coverage."

Why this works:

Links priorities to critical cybersecurity outcomes (real-time detection, collective defense).

Addresses practical constraints (legacy infrastructure compatibility).

Implicitly acknowledges scope limitations ("trade-offs in exhaustive attack vector coverage").

EXAMPLE (Public Health):

"This research primarily prioritizes early detection speed, aiming to identify outbreaks 14 days faster than current systems; secondarily prioritizes health equity, ensuring diagnostic accuracy across low-resource settings; and tertiarily prioritizes operational efficiency, reducing administrative burdens by 40% while accepting limitations in rare disease coverage."

Why this works:

Quantifies priorities (14-day faster detection, 40% burden reduction).

Centers equity as a core priority (not an afterthought).

Explicitly notes trade-offs ("accepting limitations in rare disease coverage").

5. Note Project Management & Validation

This step reassures the examiner that your project is practical and structured. You’re not just describing ideas — you have a plan to test them.

Mention the methodological approach (e.g., iterative refinement, experimental evaluation).

Name the datasets/benchmarks you’ll use.

Show that you’ve considered ethics and compliance from the start.

Example:

“Management follows a structured methodology, emphasizing iterative refinement of model architecture, validation against benchmark datasets (e.g., UTKFace, FG-NET), and ethical compliance.”

Why this works:

Signals process discipline (iterative refinement).

Shows external validity via recognised benchmarks.

Flags responsible practice (ethics).

EXAMPLE (Cybersecurity):

"Management employs an agile development cycle with bi-weekly threat simulation testing, validation against MITRE ATT&CK and NIST Cybersecurity Framework benchmarks, and adherence to GDPR/CCPA compliance protocols throughout the system lifecycle."

Why this works:

Specifies methodology (agile development, bi-weekly testing).

Uses industry-standard benchmarks (MITRE ATT&CK, NIST).

Integrates compliance from inception (GDPR/CCPA protocols).

EXAMPLE (Public Health):

"Management utilizes a participatory action research approach with quarterly stakeholder reviews, validation against WHO Global Health Observatory datasets and CDC epidemic thresholds, and IRB-approved protocols for data privacy and community engagement."

Why this works:

Emphasizes collaborative methodology (participatory action research).

References authoritative benchmarks (WHO, CDC).

Highlights ethical rigor (IRB approval, community engagement).

6. Conclude with a Forward Pointer

Finally, close the section with a short line that signals where the detail will come later. This avoids overwhelming Chapter 1.

Summarise the direction and purpose of the project in one line.

Promise that design and evaluation details will appear in methodology/findings chapters.

Keep it confident but brief.

Example:

“The design, commercial feasibility, and empirical validation will be detailed in subsequent chapters.”

Why this works:

Prevents a methods dump in Chapter 1.

Maintains flow into Methodology/Implementation chapters.

EXAMPLE (Cybersecurity):

"The adaptive threat detection architecture, quantum-resistant implementation protocols, and cross-organizational validation results will be comprehensively examined in Chapters 3 and 4."

Why this works:

Specifies content (architecture, protocols, validation).

Clearly maps to later chapters (3 and 4).

Maintains concise, forward-looking tone.

EXAMPLE (Public Health):

"The integrated surveillance platform design, equity-centered deployment strategies, and outbreak prediction performance metrics will be systematically analyzed in the methodology and evaluation chapters."

Why this works:

Summarizes key deliverables (platform design, strategies, metrics).

Explicitly signposts locations (methodology and evaluation chapters).

Uses confident, academic language ("systematically analyzed").

Complete example (with mapping notes) (Toolkit)

Step 1: Overall goal

“This project develops a semi-supervised regression model to address critical challenges in facial age estimation, focusing on mitigating data scarcity and demographic bias.”

Step 2: Core aim

“The core goal is to create a robust system that accurately estimates age from facial images while minimizing reliance on exhaustively labeled datasets.”

Step 3: Key features

“Key features include leveraging unlabeled data to enhance model generalization, implementing transfer learning techniques to adapt pre-trained neural architectures (e.g., ResNet, EfficientNet), and ensuring equitable performance across diverse age groups and ethnicities.”

Step 4: Priorities

“This research firstly prioritizes data efficiency, reducing dependency on costly manual annotation by integrating semi-supervised learning (SSL) paradigms. Secondly, the research prioritizes bias mitigation, actively addressing performance disparities in underrepresented demographics through balanced training strategies. And lastly, the research prioritizes scalability, designing a framework adaptable to real-world applications like age-restricted access control and personalized healthcare.”

Step 5: Management & validation

“Management follows a structured methodology, emphasizing iterative refinement of model architecture, validation against benchmark datasets (e.g., UTKFace, FG-NET), and ethical compliance.”

Step 6: Forward pointer

“The design, commercial feasibility, and empirical validation will be detailed in subsequent chapters.”

3.4 Aims and Objectives

By this stage, the examiner already knows the background, the gaps, and the outline of your project. Now you need to pin down exactly what you’re trying to achieve and how you’ll break it into smaller, achievable steps. This is one of the most important parts of Chapter 1.

Think of it as a contract with your reader:

The aim is the promise of what you’ll achieve.

The objectives are the milestones that will deliver on that promise.

What it should include

One clear aim: broad enough to cover the whole project, but precise enough to guide direction.

A set of objectives: normally 4–6, each beginning with an action verb (“to collect…”, “to analyse…”).

Logical order: objectives should flow like a research roadmap.

Link to evaluation: make sure objectives are written so progress can be measured.

How to write it

Step 1 — State the overarching aim

Your aim is the big promise of your research. Keep it concise but strong — it must reflect the gap you identified earlier and give a clear direction.

Start with: “The aim of this research is to…”

Use verbs that show action and purpose (develop, design, investigate, evaluate).

Keep it broad enough to cover the project, but not so vague that it could apply to any study.

Example (Face-Age project):

“The aim of the research is to develop an accurate and scalable model for predicting human age from facial images by leveraging transfer learning and semi-supervised regression techniques, enabling the effective use of both labeled and unlabeled data to improve age estimation performance, particularly in scenarios where labeled datasets are limited.”

Why this works:

States the output (an age prediction model).

States the method (transfer learning + semi-supervised regression).

States the value (accuracy, scalability, improved use of data).

Targets a clear gap (limited labeled datasets).

EXAMPLE (Cybersecurity):

"The aim of this research is to design and validate an adaptive AI-driven framework that detects zero-day cyberattacks in real-time by integrating behavioral analytics with quantum-resistant encryption, reducing false positives by 70% while maintaining compatibility with legacy enterprise infrastructure."

Why this works:

Specifies the deliverable (adaptive AI framework).

Names the method (behavioral analytics + quantum-resistant encryption).

Quantifies targets (70% fewer false positives).

Addresses critical gaps (zero-day detection, legacy compatibility).

EXAMPLE (Public Health):

"The aim of this research is to develop and evaluate an integrated early-warning surveillance platform that unifies genomic, mobile, and environmental data to predict disease outbreaks 14 days earlier than current systems, while ensuring diagnostic equity across low-resource settings and reducing administrative burdens by 40%."

Why this works:

Defines the solution (integrated surveillance platform).

Specifies data sources (genomic, mobile, environmental).

Quantifies outcomes (14-day earlier prediction, 40% burden reduction).

Centers equity as a core goal (diagnostic equity).

Step 2 — Break it down into objectives

Objectives are the building blocks of your aim. They turn a broad vision into practical tasks. Each should be clear, specific, and measurable.

Write 4–6 objectives (enough to cover the aim, but not overwhelming).

Begin each one with “To…” followed by an action verb.

Cover the logical flow of your project: dataset → baseline → innovation → evaluation → comparison.

Example:

“The first objective involves collecting and preprocessing a dataset of facial images with age labels, ensuring the data is properly formatted and prepared for model training.”

Why this works:

Begins with a practical starting point (data).

Uses an action verb (“collecting and preprocessing”).

Ensures project feasibility (good data → good model).

EXAMPLE (Cybersecurity):

"The first objective involves compiling and annotating a dataset of zero-day attack patterns and network traffic logs, ensuring data represents diverse attack vectors and enterprise environments for model training."

Why this works:

Establishes foundational data needs (attack patterns, traffic logs).

Uses action verbs ("compiling and annotating").

Ensures relevance to real-world scenarios (diverse vectors, enterprise environments).

EXAMPLE (Public Health):

"The first objective involves aggregating and harmonizing genomic sequences, mobile symptom reports, and environmental sensor data from multiple sources, ensuring interoperability across data types for integrated analysis."

Why this works:

Addresses data integration challenges (multiple sources, data types).

Uses action verbs ("aggregating and harmonizing").

Ensures foundational readiness for cross-domain analysis.

Step 3 — Make objectives SMART-friendly

Your objectives don’t have to spell out SMART, but they should feel specific and achievable. Examiners want to see that they’re not vague promises.

Specific: each targets a single task (not bundled).

Measurable: include references to metrics, datasets, or benchmarks.

Achievable: realistic within your timeframe/resources.

Relevant: must connect directly back to your aim.

Example:

“The second objective focuses on implementing and evaluating pre-trained CNN models, such as ResNet and VGG, for feature extraction to leverage their existing knowledge from large-scale image recognition tasks.”

Why this works:

Introduces transfer learning as a logical next step.

Anchors the project in well-established architectures.

Sets up fair comparison with your SSL approach.

EXAMPLE (Cybersecurity):

"The second objective focuses on deploying and benchmarking quantum-resistant encryption algorithms against NIST standards, measuring cryptographic strength and computational overhead to establish a baseline for secure communication modules."

Why this works:

Specific: Targets a single task (deploying/benchmarking encryption).

Measurable: References NIST standards and metrics (cryptographic strength, overhead).

Achievable: Uses established algorithms and evaluation criteria.

Relevant: Directly supports the aim of quantum-resistant security.

EXAMPLE (Public Health):

"The second objective focuses on implementing and validating machine learning models for genomic data analysis using NCBI reference datasets, measuring prediction accuracy against WHO pathogen classification thresholds to establish diagnostic baselines."

Why this works:

Specific: Concentrates on genomic data modeling.

Measurable: Uses NCBI datasets and WHO thresholds.

Achievable: Leverages existing reference databases.

Relevant: Aligns with the aim of integrated outbreak prediction.

Step 4 — Order Objectives logically

Think of your objectives as stepping stones. When read in sequence, they should outline the flow of your project clearly.

Arrange them in a natural order (data preparation → model development → innovation → testing → evaluation).

Avoid overlaps — no two objectives should sound like they’re doing the same thing.

If needed, flag boundaries: what your objectives will do and what they won’t.

Example:

“The third objective aims to integrate semi-supervised learning techniques, including pseudo-labeling and consistency training, into the regression framework to effectively utilize both labeled and unlabeled data.”

Why this works:

Connects directly to your research novelty.

Highlights both methods (pseudo-labeling + consistency).

Shows how the project tackles the unused data problem from 3.2.
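If you want to check that you can explain this objective to an examiner, it helps to reduce it to a toy example. The sketch below is illustrative only and is not the Face-Age project's method: it assumes a hypothetical one-dimensional feature, a 1-nearest-neighbour predictor, and a consistency check used as a simple filter (real consistency training is usually a loss term applied during model training). The names `predict_1nn`, `pseudo_label`, `delta`, and `tol` are all assumptions made for illustration.

```python
def predict_1nn(x, train):
    """Return the age label of the labeled example whose feature is closest to x."""
    nearest = min(train, key=lambda pair: abs(pair[0] - x))
    return nearest[1]


def pseudo_label(train, unlabeled, delta=0.2, tol=1.0):
    """Pseudo-label only the unlabeled points whose two perturbed views agree."""
    accepted = []
    for x in unlabeled:
        low = predict_1nn(x - delta, train)   # prediction for view 1
        high = predict_1nn(x + delta, train)  # prediction for view 2
        if abs(low - high) <= tol:            # consistent, so trust the label
            accepted.append((x, (low + high) / 2))
    return accepted


# Hypothetical data: (feature, age) pairs plus unlabeled feature values.
labeled = [(0.0, 20.0), (10.0, 60.0)]
unlabeled = [1.0, 4.95, 9.0]

new_pairs = pseudo_label(labeled, unlabeled)
augmented = labeled + new_pairs  # training set for the next round
print(new_pairs)  # [(1.0, 20.0), (9.0, 60.0)]
```

The point near the middle (4.95) is rejected because its two views receive very different predictions. That is the intuition behind combining the two techniques: unlabeled data is used, but only where the model is stable.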

EXAMPLE (Cybersecurity):

"The third objective focuses on implementing and benchmarking baseline intrusion detection systems (e.g., Snort, Suricata) against MITRE ATT&CK framework metrics to establish performance thresholds for zero-day attack detection accuracy."

Why this works:

Specific: Targets a single task (implementing/benchmarking baselines).

Measurable: Uses MITRE ATT&CK framework metrics for evaluation.

Achievable: Leverages established tools (Snort, Suricata).

Relevant: Directly supports the aim of zero-day detection.

EXAMPLE (Public Health):

"The third objective focuses on deploying and validating existing outbreak prediction algorithms (e.g., EpiFast, GLEAM) against WHO Global Health Observatory data to establish baseline performance for early-warning timeliness and geographic coverage."

Why this works:

Specific: Focuses on deploying/validating existing algorithms.

Measurable: Uses WHO datasets and metrics (timeliness, coverage).

Achievable: Uses established tools (EpiFast, GLEAM).

Relevant: Supports the aim of improving outbreak prediction speed.

Step 5 — Ensure alignment with your research design

Every objective should map to something in your methodology and contribute to answering your research question.

Check that each objective directly relates to your research aim and RQ.

Make sure they connect to the gap identified earlier (not side issues).

Use consistent wording so objectives echo terms used in your aim and project description.

Example:

“The fourth objective is to analyze model performance using established metrics like Mean Absolute Error (MAE) and Root Mean Squared Error (RMSE) to quantitatively assess prediction accuracy.”

Why this works:

Names specific metrics (MAE, RMSE).

Ensures evaluation is objective and quantifiable.

Keeps the aim of accuracy grounded in evidence.
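If you name MAE and RMSE in an objective, be ready to compute them. The following is a minimal sketch using only the Python standard library; the age values are made up for illustration and are not results from any study.

```python
import math


def mae(y_true, y_pred):
    """Mean Absolute Error: the average size of the prediction errors."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true, y_pred):
    """Root Mean Squared Error: like MAE, but large errors weigh more."""
    squared = [(t - p) ** 2 for t, p in zip(y_true, y_pred)]
    return math.sqrt(sum(squared) / len(y_true))


# Hypothetical true and predicted ages (in years).
true_ages = [25, 40, 60, 33]
predicted_ages = [28, 38, 54, 34]

print(mae(true_ages, predicted_ages))   # 3.0
print(rmse(true_ages, predicted_ages))  # about 3.54
```

RMSE is never smaller than MAE on the same predictions, so reporting both tells the examiner whether a few large errors are dominating the result.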

EXAMPLE (Cybersecurity):

"The fourth objective is to evaluate framework effectiveness using MITRE ATT&CK technique coverage rates and false positive reduction percentages to quantitatively measure zero-day threat detection performance."

Why this works:

Specific metrics: Uses MITRE ATT&CK coverage rates and false positive percentages.

Quantifiable assessment: Focuses on measurable outcomes (coverage rates, percentages).

Alignment: Directly supports the aim of zero-day detection and false positive reduction.

EXAMPLE (Public Health):

"The fourth objective is to assess platform performance using WHO outbreak prediction timeliness metrics and health equity indices to quantitatively measure early-warning capability and diagnostic accuracy across demographics."

Why this works:

Specific metrics: References WHO timeliness metrics and equity indices.

Quantifiable assessment: Measures both speed (timeliness) and fairness (equity).

Alignment: Directly ties to the aim of earlier prediction and equitable health outcomes.

Step 6 — Present clearly and link forward

Clarity in presentation is key — examiners should be able to tick off your objectives as they read your later chapters.

Write the aim in 1–2 sentences (short paragraph).

List objectives as numbered items (never bury them in prose).

If helpful, add a short success note (e.g., “measured using MAE/RMSE”).

End with a bridge sentence to Research Question & Novelty (e.g., “Together, these objectives operationalise the aim and ground the research question explored next”).

Example:

“Finally, the fifth objective involves comparing the developed model against baseline supervised regression models to demonstrate the relative improvements and advantages of the proposed transfer semi-supervised approach.”

Why this works:

Provides closure to the objectives list.

Frames results in terms of relative improvement.

Strengthens your claim of contribution.

EXAMPLE (Cybersecurity):

"Finally, the fifth objective involves benchmarking the adaptive AI framework against industry-standard intrusion detection systems (e.g., Darktrace, CrowdStrike) to quantify improvements in zero-day detection speed and reduction in false positive rates."

Why this works:

Uses comparative language ("benchmarking against") to show validation.

Names industry leaders (Darktrace, CrowdStrike) for credibility.

Quantifies improvements (detection speed, false positive rates).

Sets up contribution claims for the novelty section.

EXAMPLE (Public Health):

"Finally, the fifth objective involves comparing the integrated surveillance platform against existing early-warning systems (e.g., ProMED, HealthMap) to validate improvements in outbreak prediction timeliness and diagnostic equity across diverse populations."

Why this works:

Explicitly states comparative validation ("against existing systems").

References established platforms (ProMED, HealthMap) for context.

Focuses on project-specific metrics (timeliness, equity).

Creates natural transition to research question about innovation.

Quick writing tips for students

Only one aim: keep it clean.

Objectives start with verbs: collect, implement, integrate, analyse, compare.

Logical order: think “data → baseline → innovation → evaluation → comparison”.

SMART check: can each objective be achieved and evaluated?

Word count tip: 1 aim (50–70 words), 4–6 objectives (each 1–2 sentences). Total ~250–350 words.
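The tips above can be turned into a quick self-check before you submit a draft. This is a rough sketch, not a standard tool: the function name `check_aims_section` is hypothetical, the verb list copies the verbs named above (plus the US spelling "analyze" as an added assumption), and word counts use simple whitespace splitting.

```python
ACTION_VERBS = {"collect", "implement", "integrate", "analyse", "analyze", "compare"}


def check_aims_section(aim, objectives):
    """Return a list of problems found; an empty list means all checks passed."""
    problems = []

    aim_words = len(aim.split())
    if not 50 <= aim_words <= 70:
        problems.append(f"aim has {aim_words} words (target 50-70)")

    if not 4 <= len(objectives) <= 6:
        problems.append(f"{len(objectives)} objectives (target 4-6)")

    for number, objective in enumerate(objectives, start=1):
        words = objective.lower().split()
        # Accept both "To collect ..." and "Collect ...".
        if len(words) > 1 and words[0] == "to":
            verb = words[1]
        else:
            verb = words[0] if words else ""
        if verb not in ACTION_VERBS:
            problems.append(f"objective {number} does not start with a listed action verb")

    return problems


draft_objectives = [
    "To collect and preprocess facial images",
    "To implement pre-trained CNN baselines",
    "To integrate semi-supervised learning",
    "To analyse performance using MAE and RMSE",
]
draft_aim = " ".join(["word"] * 55)  # stand-in for a 55-word aim
print(check_aims_section(draft_aim, draft_objectives))  # []
```

Extend the verb list to suit your field; the check is deliberately strict so that vague openings stand out.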

3.4 Aims & Objectives: Paragraph Mapping (Toolkit)

Step 1: Aim (overall research goal)

“The aim of the research is to develop an accurate and scalable model for predicting human age from facial images by leveraging transfer learning and semi-supervised regression techniques, enabling the effective use of both labeled and unlabeled data to improve age estimation performance, particularly in scenarios where labeled datasets are limited.”

This is the single overarching aim: develop a scalable, accurate model using transfer learning + semi-supervised regression.

Step 2: Objective 1 (Data preparation)

“The first objective involves collecting and preprocessing a dataset of facial images with age labels, ensuring the data is properly formatted and prepared for model training.”

Foundation step: ensures the dataset is reliable and usable.

Step 3: Objective 2 (Transfer learning baseline)

“The second objective focuses on implementing and evaluating pre-trained CNN models, such as ResNet and VGG, for feature extraction to leverage their existing knowledge from large-scale image recognition tasks.”

Establishes a baseline using well-known models (ResNet, VGG).

Step 4: Objective 3 (Semi-supervised integration)

“The third objective aims to integrate semi-supervised learning techniques, including pseudo-labeling and consistency training, into the regression framework to effectively utilize both labeled and unlabeled data.”

Introduces the novel contribution (semi-supervised learning integration).

Step 5: Objective 4 (Performance evaluation)

“The fourth objective is to analyze model performance using established metrics like Mean Absolute Error (MAE) and Root Mean Squared Error (RMSE) to quantitatively assess prediction accuracy.”

Anchors the research in measurable, quantitative evaluation.

Step 6: Objective 5 (Benchmark comparison)

“Finally, the fifth objective involves comparing the developed model against baseline supervised regression models to demonstrate the relative improvements and advantages of the proposed transfer semi-supervised approach.”

Provides closure by proving added value compared to baselines.

3.5 Research Question and Novelty

Step 1: Present the main research question

Purpose:

Your research question anchors the whole dissertation. It must be clear, researchable, and directly tied to the gap you identified earlier. Examiners look here to judge: is this a strong enough question to justify a full project?

What to write:

Phrase it as one clear question.

Include your approach and outcome in the wording.

Example (Face-Age):

“This research addresses the critical question: How can pseudo-labeling techniques integrated with transfer learning in a semi-supervised regression framework effectively leverage both labeled and unlabeled facial images to achieve statistically significant improvements in age prediction accuracy compared to conventional supervised approaches?”

Why this works:

It’s specific (names pseudo-labeling, transfer learning, semi-supervised regression).

It’s measurable (asks if accuracy improves vs supervised baselines).

It’s relevant (directly linked to the data scarcity/unlabeled data gap in 3.2).

It sets a testable challenge rather than a vague theme.
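A question that promises "statistically significant improvements" commits you to a statistical test later on. One common option, shown here only as a sketch, is a paired t-test on per-image absolute errors from the baseline and the proposed model. The error values below are invented for illustration, and `paired_t_statistic` is a hypothetical helper; your own study may call for a different test.

```python
import math
import statistics


def paired_t_statistic(baseline_errors, proposed_errors):
    """t statistic for the mean per-image error reduction (baseline minus proposed)."""
    diffs = [b - p for b, p in zip(baseline_errors, proposed_errors)]
    mean_diff = statistics.mean(diffs)
    std_err = statistics.stdev(diffs) / math.sqrt(len(diffs))
    return mean_diff / std_err


# Hypothetical absolute errors (years) on the same four test images.
baseline_errors = [5.0, 6.0, 7.0, 8.0]
proposed_errors = [3.0, 5.0, 4.0, 6.0]

t_value = paired_t_statistic(baseline_errors, proposed_errors)
print(round(t_value, 3))  # 4.899, with len(diffs) - 1 = 3 degrees of freedom
```

With 3 degrees of freedom the two-sided 5% critical value is 3.182, so this toy result would count as significant. A real evaluation needs far more than four images.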

Step 2: Add sub-questions (if needed)

Optional, but sub-questions help break down complex projects. They keep your study structured and make it easy to track whether objectives have been met.

What to write:

Use 2–3 sub-questions that map directly to your objectives.

Keep them short and practical.

Example (Face-Age):

What role do pre-trained CNNs (ResNet/EfficientNet) play in improving feature extraction for age prediction?

How does pseudo-labeling improve utilization of unlabeled datasets?

To what extent does the proposed framework reduce demographic bias compared to existing models?

Why this works:

Each sub-question ties to an objective (feature extraction, unlabeled data, fairness).

They clarify the research roadmap.

They prevent the project from drifting away from the main question.

Step 3 : State the novelty claim

Examiners expect you to clearly state what is new. Here, you differentiate your study from existing work. Novelty can be a new combination, a new dataset, or applying known methods in a new way.

What to write:

Identify 2–3 elements that haven’t been combined or tested together.

Show how this is different from current literature.

Example (Face-Age):

“The novelty lies in pioneering a unified framework that synergistically combines three underexplored elements: (1) pseudo-labeling to harness abundant unlabeled facial images, reducing costly manual annotation (Ma et al., 2024); (2) transfer learning via pre-trained CNNs such as ResNet and EfficientNet, leveraging large-scale image knowledge (Randellini, 2023); and (3) semi-supervised regression with a dedicated regression head for continuous age prediction (He et al., 2022), enhanced by consistency regularization (Jo, Kahng and Kim, 2024).”

Why this works:

It breaks novelty into three concrete innovations.

It cites literature to show these are genuine gaps, not generic claims.

It explains why each element matters (reduce labels, use strong feature extractors, stabilize predictions).

It frames novelty as a framework, not just a trick.

Step 4 : Explain why the novelty matters (value)

Novelty must connect to value: why your contribution matters for theory, practice, ethics, or industry. Without value, originality feels trivial.

What to write:

Link originality to the gaps named in 3.2.

Show real-world benefits (accuracy, fairness, cost-effectiveness, scalability).

Example (Face-Age):

“This integration directly tackles the research gap identified by Bekhouche et al. (2024) and Dwivedi et al. (2021): current models fail to jointly optimize labeled/unlabeled data utilization for applications like security, content filtering, and demographic analytics. By validating on benchmarks (UTKFace/FG-NET) using MAE and RMSE, the work delivers a cost-effective, scalable solution that reduces dependency on labeled data while improving accuracy across diverse real-world scenarios.”

Why this works:

It ties novelty to published gaps (data scarcity and wasted unlabeled data).

It shows practical applications (security, content filtering, analytics).

It uses benchmark datasets + metrics to prove rigour.

It frames value in academic, commercial, and social terms.

Step 5 : Conclude with a forward pointer

Keep the introduction lean. You don’t need to prove novelty fully here; just preview it and point the reader to later chapters for validation.

What to write:

One line promising design/testing will show the novelty in action.

Example (Face-Age, implied):

“The design, evaluation, and implications of this novel framework will be examined in detail in the methodology, findings, and conclusion chapters.”

Why this works:

It avoids info-dumping in Chapter 1.

It reassures the reader that novelty will be backed by evidence later.

It maintains flow into the next section.

3.5 Research Question & Novelty: Paragraph Mapping

Step 1 : Main Research Question

“This research addresses the critical question: How can pseudo-labeling techniques integrated with transfer learning in a semi-supervised regression framework effectively leverage both labeled and unlabeled facial images to achieve statistically significant improvements in age prediction accuracy compared to conventional supervised approaches?”

Anchors the project with one clear, testable question.

Step 2 : (Optional) Sub-questions

(Not in your draft, but could be added for clarity, e.g.):

What role do pre-trained CNNs play in improving feature extraction?

How does pseudo-labeling improve utilization of unlabeled data?

To what extent does the framework reduce demographic bias?

Breaks the big question into smaller, structured parts that align with objectives.

Step 3 : Novelty Claim

“The novelty lies in pioneering a unified framework that synergistically combines three underexplored elements: (1) pseudo-labeling to harness abundant unlabeled facial images from social media and digital repositories, addressing the costly, time-consuming burden of manual annotation highlighted by Ma et al. (2024); (2) transfer learning via pre-trained CNNs (ResNet/EfficientNet) as feature extractors (Randellini, 2023), leveraging their ability to capture generalizable facial features from massive datasets; and (3) semi-supervised regression with a dedicated regression head for continuous age prediction (He et al., 2022), enhanced by consistency regularization to stabilize predictions under augmentations (Jo, Kahng and Kim, 2024).”

Defines novelty as three specific, concrete contributions backed by citations.

Step 4 : Why the Novelty Matters (Value)

“This integration directly tackles the research gap identified by Bekhouche et al. (2024) and Dwivedi et al. (2021): current models fail to jointly optimize labeled/unlabeled data utilization for applications like security, content filtering, and demographic analytics. By validating on benchmarks (UTKFace/FG-NET) using MAE/RMSE, the work delivers a cost-effective, scalable solution that reduces dependency on labeled data while improving accuracy across diverse real-world scenarios.”

Shows novelty has theoretical, practical, and commercial value.

Step 5 : Concluding Forward Pointer

(Implied, but you can add a sentence for flow): “The design, validation, and broader implications of this novel framework will be examined in detail in the methodology, findings, and conclusion chapters.”

Keeps the introduction concise and points to later sections for full validation.

3.6 Feasibility, Commercial Context, and Risk

What this section is

This part demonstrates that your project is realistic to carry out and has relevance in the real world. Examiners want to see that you’ve thought about:

Feasibility → Is the project technically doable with the resources you have?

Commercial & economic context → Does the solution matter to industry, businesses, or society?

Risks → What challenges could threaten your project or adoption, and how will you mitigate them?

Think of this section as a credibility check: you’re proving that your work is both achievable and meaningful outside academia.

What it should include

Technical feasibility → tools, frameworks, datasets you’ll use.

Commercial/economic potential → industries that would benefit, cost savings, scalability.

Risks → technical, ethical, market, or regulatory challenges.

Mitigation strategies → how you plan to handle those risks.

Forward pointer → state that risks and feasibility will be analysed further in your evaluation chapter.

How to write it

Step 1 : Demonstrate technical feasibility

Here you reassure the reader that your project is actually doable with available tools, datasets, and resources.

Name the frameworks, platforms, or models you’ll use.

Mention the datasets or benchmarks that support the work.

Highlight any early evidence of viability (e.g., cost savings, performance gains).

Example (Face-Age):

“This project shows strong technical viability by using proven tools like PyTorch and ResNet-50 on benchmark datasets like UTKFace, achieving a 60% reduction in annotation costs and enabling deployment on resource-constrained devices.”

Why this works:

Cites specific tools and datasets (credible, realistic).

Mentions a quantified benefit (60% cost reduction).

Shows deployability beyond lab settings (resource-constrained devices).

Example (Cybersecurity):

"This project demonstrates technical feasibility through established frameworks like TensorFlow Federated and MITRE ATT&CK datasets, achieving 40% faster threat detection in preliminary tests while maintaining compatibility with existing enterprise security infrastructure."

Why this works:

Names industry-standard tools (TensorFlow Federated) and datasets (MITRE ATT&CK).

Quantifies performance gain (40% faster detection).

Addresses integration concerns (compatibility with existing infrastructure).

Example (Public Health):

"This project confirms technical viability using WHO Global Health Observatory datasets and Apache NiFi data pipelines, reducing outbreak prediction processing time by 65% in pilot tests while enabling integration with low-resource clinic information systems."

Why this works:

Leverages authoritative data sources (WHO datasets) and tools (Apache NiFi).

Quantifies efficiency improvement (65% faster processing).

Addresses accessibility constraints (integration with low-resource systems).

Step 2 : Show practical achievability

Examiners want to know that your project can be completed within the scope of a dissertation/thesis.

Note that your design is manageable within the timeframe.

Show you have access to necessary data/resources.

Keep expectations realistic and bounded (not “solving everything at once”).

Example (Face-Age):

“Commercially, the solution targets high-demand markets such as social media age verification, healthcare triage, and retail analytics, projecting annual savings of $2.1 million for businesses.”

Why this works:

Lists specific industries → applied value is clear.

Quantifies financial impact → strong credibility.

Links the research to pressing, high-demand contexts.

Example (Cybersecurity):

"This project is achievable within a 12-month timeline using existing university cloud infrastructure and accessible MITRE ATT&CK datasets, focusing specifically on financial services and healthcare sectors where breach prevention ROI exceeds $6M annually."

Why this works:

Explicitly states timeframe feasibility (12 months).

Confirms resource access (university cloud, MITRE datasets).

Binds scope to high-impact sectors (financial services, healthcare) with ROI justification.

Example (Public Health):

"The platform can be developed within 18 months using open-source frameworks and WHO public data repositories, prioritizing implementation in regional hospitals where outbreak response delays cost $1.4M per incident in preventable healthcare expenditures."

Why this works:

Sets realistic development timeline (18 months).

Verifies data/tool accessibility (open-source frameworks, WHO repositories).

Focuses on bounded scope (regional hospitals) with quantified impact ($1.4M per incident).

Step 3 : Highlight commercial and economic relevance

Go beyond feasibility and show why this project matters outside academia. Link your work to industries, markets, or end-users.

Name the sectors or industries where your work applies.

Mention financial benefits (savings, efficiencies, scalability).

Connect to current market demand or societal needs.

Example (Face-Age):

“However, key risks include demographic bias affecting underrepresented groups, stiff competition from established players like Amazon Rekognition, and regulatory hurdles concerning privacy laws like GDPR and CCPA.”

Why this works:

Covers three categories of risk (ethical, market, legal).

Cites real competitors (Amazon Rekognition).

Shows awareness of global regulation pressures.

Example (Cybersecurity):